MultiARC

The MultiARC project is developing an interactive, multimodal augmented reality system for computer-assisted surgery in ear, nose and throat (ENT) treatment. The project is supported by the Federal Ministry of Education and Research (BMBF).

![Funded by the Federal Ministry of Education and Research (BMBF)](https://www.3it-berlin.de/wp-content/uploads/2019/04/BMBF_gefoerdert_2017_en-300x206.jpg)

PROJECT DETAILS

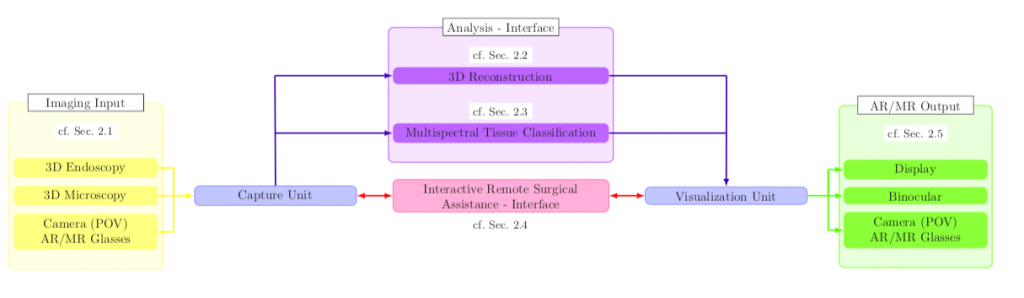

The proposed processing pipeline uses fully digital stereoscopic imaging devices, which support multispectral and white-light imaging to generate high-resolution image data, and consists of five modules. Input/output data handling, hybrid multimodal image analysis, and a bi-directional interactive augmented reality (AR) and mixed reality (MR) interface for local and remote surgical assistance are central to the complete framework.

The hybrid multimodal 3D scene analysis module uses different wavelengths to classify tissue structures and combines this spectral data with metric 3D information. Additionally, we propose a zoom-independent intraoperative tool for virtual ossicular prosthesis insertion (e.g. stapedectomy) that targets metric accuracy in the sub-millimeter range (1/10 mm). A bi-directional interactive AR/MR communication module ensures low latency while providing surgical information and avoiding information overload. The display-agnostic AR/MR visualization of the analyzed data can be transmitted and shown in sync inside the microscope's digital binocular, on 3D displays, or on any connected head-mounted display (HMD). In addition, remote clinical experts will enrich the analyzed data with annotations using AR/MR.

Furthermore, because the entire processing chain is digital, preoperative data can be registered accurately to the current surgical scene. The benefits of such a collaborative surgical system are manifold and should lead to improved patient outcomes through simplified tissue classification and reduced surgical risk.

Input:

Three-dimensional digital microscopy and endoscopy are used as input signals. In addition, various light sources illuminate the surgical scene to capture tissue structures at different wavelengths.

To expand the surgical scene via remote interaction, the system will make use of various 2D and 3D input devices and generate a time-synchronized augmented and mixed reality visualization.

Output:

For the output module, it remains to be investigated to what extent an accurate high-resolution image can be computed on all visualization devices by exploiting correlations between position, time and spectral range. Nonetheless, the format-agnostic imaging pipeline allows an adaptive presentation of the underlying mutual information. General information about the ongoing procedure can be shown on any external display. In addition, the binocular or HMDs enable interaction via specific virtual objects for communication between surgeons, or for video streaming, including audio comments and annotations, to lecture rooms for trainee surgeons or medical students. It will also be investigated whether stereoscopic displays, and which types of AR/VR devices, are suited to remote telemedicine and teaching services.

Analysis:

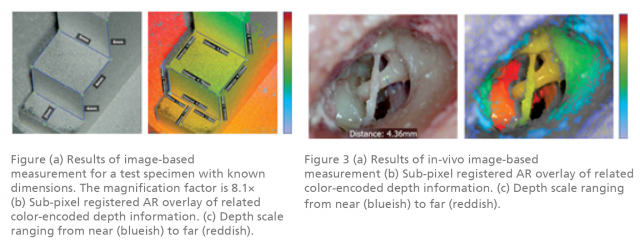

For 3D reconstruction and surgical AR/MR applications, calibrating the stereoscopic system is crucial to obtain a real-world representation of the surgical scene and the underlying optical system. Therefore, a zoom-independent calibration scheme is defined that allows image-based measurements and yields a metric 3D data representation. This 3D data serves as the basis for high-level surgical AR/MR assistance functions, such as measurements, true-to-scale prosthesis visualization, and tissue classification.
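To illustrate how a calibrated stereo system turns pixel positions into metric measurements, here is a minimal sketch based on the textbook rectified pinhole stereo model. It is not the project's calibration scheme, which the text does not detail. The function names and the parameters `focal_px`, `baseline_mm`, `cx` and `cy` are assumptions for illustration; in a zoom-independent setup, the calibration would have to supply the focal length valid for the current zoom level.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def triangulate(x_left: float, x_right: float, y: float,
                focal_px: float, baseline_mm: float,
                cx: float, cy: float) -> Point3D:
    """Back-project a matched pixel pair of a rectified stereo pair to 3D (in mm).

    Hypothetical helper: assumes an ideal rectified pinhole model with the
    same focal length (in pixels) and principal point (cx, cy) for both views.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_mm / disparity   # depth from disparity
    x = (x_left - cx) * z / focal_px         # lateral offset
    y3 = (y - cy) * z / focal_px             # vertical offset
    return (x, y3, z)


def distance_mm(p: Point3D, q: Point3D) -> float:
    """Euclidean distance between two reconstructed points, in mm."""
    return math.dist(p, q)


if __name__ == "__main__":
    # Illustrative values only, not taken from the project.
    params = dict(focal_px=2000.0, baseline_mm=5.0, cx=960.0, cy=540.0)
    a = triangulate(1000.0, 900.0, 540.0, **params)
    b = triangulate(1060.0, 960.0, 540.0, **params)
    print(f"measured distance: {distance_mm(a, b):.2f} mm")
```

Because depth scales with the focal length, an error in the focal length assumed for a given zoom level carries over directly into every measured distance. This is why the calibration has to stay valid across the zoom range.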

This project follows the idea of extracting multispectral tissue data by combining wide-band LEDs as the illumination unit with an RGB camera as a three-channel acquisition device. The scene is illuminated by all available LEDs consecutively, and images are acquired in sync with the illumination sequence. This illumination modality covers nearly the complete visible spectrum (approx. 400 nm to 780 nm).
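The sequential acquisition can be pictured as stacking one RGB capture per LED into a single multispectral cube, so that N LEDs yield up to 3 × N measurements per pixel. The sketch below shows only this stacking step with plain Python lists. The function name and data layout are assumptions for illustration, and how many of the resulting bands are effectively independent depends on the LED spectra and the camera's channel sensitivities.

```python
from typing import List, Sequence

Pixel = Sequence[float]          # (R, G, B) values of one pixel
Image = Sequence[Sequence[Pixel]]  # rows of pixels


def stack_captures(captures: Sequence[Image]) -> List[List[List[float]]]:
    """Concatenate the RGB channels of N LED-synchronized captures.

    Hypothetical helper: captures[i] is the image taken while LED i was on.
    Returns a cube indexed as cube[row][col][band] with 3 * N bands per pixel,
    ordered LED by LED.
    """
    if not captures:
        raise ValueError("at least one capture is required")
    height, width = len(captures[0]), len(captures[0][0])
    for img in captures:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all captures must share the same size")
    return [
        [
            [value for img in captures for value in img[r][c]]
            for c in range(width)
        ]
        for r in range(height)
    ]


if __name__ == "__main__":
    # Two 1x2 captures with made-up intensities, one per LED.
    led_a = [[(1.0, 2.0, 3.0), (4.0, 5.0, 6.0)]]
    led_b = [[(7.0, 8.0, 9.0), (10.0, 11.0, 12.0)]]
    cube = stack_captures([led_a, led_b])
    print(len(cube[0][0]), "bands per pixel")
```

The per-pixel band vector produced this way is the kind of input a spectral tissue classifier would consume. The stacking assumes that the scene does not move between consecutive captures.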

For remote surgical assistance, all surgeons must be able to rely and work on consistent data. Furthermore, the entire pipeline needs to run in real time without noticeable latency. This means the low-latency communication software must handle interactive scribbles, large 3D data sets, preoperative data and the patient's vital parameters.

For any of these network scenarios, we aim for a low-latency, interactive, bi-directional communication user interface that allows medicolegal consultations between surgical experts or detailed patient counseling before the intervention.

The project is divided into seven work packages (WP 1 to WP 7), each dedicated to a single element of the processing chain. The project partners contribute their expertise to different work packages and gather the results.

WP 1: Project management

WP 2: Imaging and data acquisition

WP 3: Measurement analysis

WP 4: Multispectral tissue analysis

WP 5: Telepresence and teleconsultation

WP 6: AR/VR visualization on different devices

WP 7: Evaluation and integration into the clinical workflow

MultiARC @ Forum Digital Technologies

03 December 2019

Berlin, Germany

Expert Forum: AI in Minimally Invasive Surgery and Treatment

Keynote: Research in Digital Minimally Invasive Surgery at Fraunhofer HHI

Panel Discussion: The Role of AI in Digital Medicine and Surgery – Underestimated or Overrated?


MultiARC @ MICCAI 2019

17-19 October 2019

Shenzhen, China

MultiARC successfully participated in the 22nd International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) in Shenzhen, China. The project contributed to the Endoscopic Vision Challenge from Intuitive Surgical and won the Runner-Up Award in the category ‘Lowest Mean Error’.


MultiARC @ 3IT Summit

10 September 2019

Berlin, Germany

Talk: MultiARC – AI Assisted Surgery


MultiARC Network Meeting

08 September 2019

Hamburg, Germany

MultiARC @ EMB Conference

23-27 July 2019

Berlin, Germany

At the EMB Conference 2019, MultiARC presented a stereo-endoscopic framework that allows real-time-capable, image-based measurements of anatomical structures. A 3D endoscope was used to reconstruct the oral cavity in order to measure the orofacial clefts of five pediatric patients.

Endoscopic Single-Shot 3D Reconstruction of Oral Cavity

J.-C. Rosenthal, E. L. Wisotzky, P. Eisert, and F. C. Uecker


MultiARC @ CARS 2019

18-21 June 2019

Rennes, France

MultiARC was represented in a number of talk sessions at the 33rd International Conference of Computer Assisted Radiology and Surgery.

- Talk in Session Joint ISCAS & CAD: Artificial Intelligence for Computer-aided Diagnosis and Minimally Invasive Therapy

“A multispectral snapshot camera method to analyze optical tissue characteristics in vivo”

- Talk in Session Mixed reality for Surgical Simulation, Training and Education

“An AR-Solution for Education and Consultation during Microscopic Surgery”

- “Introducing a zoom-independent calibration target for augmented reality applications using a digital surgical microscope”


MultiARC @ IEEEVR 2019

23-27 March 2019

Osaka, Japan

MultiARC was presented at the 26th IEEE VR Conference on Virtual Reality and 3D User Interfaces at the IEEE VR Workshop on Applied VR for Enhanced Healthcare (AVEH).

Interactive & Multimodal-based AR for Remote Assistance using a Digital Surgical Microscope

E. L. Wisotzky, J.-C. Rosenthal, P. Eisert, A. Hilsmann, F. Schmid, M. Bauer, A. Schneider, F. C. Uecker

Fraunhofer HHI presented an interactive and multimodal-based augmented reality system for computer-assisted surgery in the context of ear, nose and throat (ENT) treatment. The proposed processing pipeline uses fully digital stereoscopic imaging devices, which support multispectral and white light imaging to generate high-resolution image data, and consists of five modules. Input/output data handling, a hybrid multimodal image analysis and a bi-directional interactive augmented reality (AR) and mixed reality (MR) interface for local and remote surgical assistance are of high relevance for the complete framework.

The hybrid multimodal 3D scene analysis module uses different wavelengths to classify tissue structures and combines this spectral data with metric 3D information. Additionally, we propose a zoom-independent intraoperative tool for virtual ossicular prosthesis insertion (e.g. stapedectomy) guaranteeing very high metric accuracy in the sub-millimeter range (1/10 mm). A bi-directional interactive AR/MR communication module guarantees low latency while providing surgical information and avoiding information overload. Display-agnostic AR/MR visualization can show our analyzed data synchronized inside the digital binocular, on the 3D display or on any connected head-mounted display (HMD). In addition, the analyzed data can be enriched with annotations by involving external clinical experts using AR/MR and, furthermore, with accurately registered preoperative data. Such a collaborative surgical system will lead to a highly improved patient outcome through easier tissue classification and reduced surgical risk.


MultiARC @ SPIE Medical Imaging 2019

16-21 February 2019

San Diego, California, United States

SPIE Medical Imaging focuses on the latest innovations found in underlying fundamental scientific principles, technology developments, scientific evaluation, and clinical application. The symposium covers the full range of medical imaging modalities including image processing, physics, computer-aided diagnosis, perception, image-guided procedures, biomedical applications, ultrasound, informatics, radiology and digital pathology, with an increased focus on fast emerging areas like deep learning, AI, and machine learning.

Validation of two techniques for intraoperative hyperspectral human tissue determination

Eric L. Wisotzky, Fraunhofer Institute for Telecommunications Heinrich Hertz Institute, HHI (Germany) and Humboldt-Univ. zu Berlin (Germany); Benjamin Kossack, Fraunhofer Institute for Telecommunications Heinrich Hertz Institute, HHI (Germany); Florian C. Uecker, Philipp Arens, Steffen Dommerich, Charité Universitätsmedizin Berlin (Germany); Anna Hilsmann, Fraunhofer Institute for Telecommunications Heinrich Hertz Institute, HHI (Germany); Peter Eisert, Fraunhofer Institute for Telecommunications Heinrich Hertz Institute, HHI (Germany) and Humboldt-Univ. zu Berlin (Germany)


MultiARC @ Smart Data Forum

03 December 2018

Berlin, Germany

SIBB e.V. presents a monthly talk on Health-IT. Under the topic of image processing, the researchers Jean-Claude Rosenthal and Eric Wisotzky of Fraunhofer HHI gave a lecture on 3D analysis with measurement and on hyperspectral analysis with tissue differentiation.


| Type | Publication | Link |
| --- | --- | --- |
| Journal Paper | E. L. Wisotzky, P. Arens, S. Dommerich, A. Hilsmann, P. Eisert, F. C. Uecker, Determination of the optical properties of cholesteatoma in the spectral range of 250 to 800 nm, Biomedical Optics Express, 11(3):1489-1500, 2020. | [bib] [pdf] [url] |
| Conference Paper | E. L. Wisotzky, B. Kossack, F. C. Uecker, P. Arens, S. Dommerich, A. Hilsmann, P. Eisert, Validation of two techniques for intraoperative hyperspectral human tissue determination, Proceedings of SPIE, 10951:109511Z, 2019. | [bib] [pdf] [url] |
| Conference Paper | E. L. Wisotzky, J.-C. Rosenthal, P. Eisert, A. Hilsmann, F. Schmid, M. Bauer, A. Schneider, F. C. Uecker, Interactive and Multimodal-based Augmented Reality for Remote Assistance using a Digital Surgical Microscope, IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 2019. | [bib] [pdf] [url] |
| Journal Paper | E. L. Wisotzky, F. C. Uecker, S. Dommerich, A. Hilsmann, P. Eisert, P. Arens, Determination of optical properties of human tissues obtained from parotidectomy in the spectral range of 250 to 800 nm, Journal of Biomedical Optics, 24(12):125001, 2019. | [bib] [pdf] [url] |
| Conference Abstract | J.-C. Rosenthal, A. Schneider, P. Eisert, Introducing a zoom-independent calibration target for augmented reality applications using a digital surgical microscope, Proc. Computer Assisted Radiology and Surgery (CARS), Rennes, France, 2019. | |
| Conference Abstract | E. L. Wisotzky, F. C. Uecker, P. Arens, A. Hilsmann, P. Eisert, A multispectral snapshot camera method to analyze optical tissue characteristics in vivo, Proc. Computer Assisted Radiology and Surgery (CARS), Rennes, France, 2019. | [bib] [pdf] [url] |
| Conference Abstract | A. Schneider, M. Lanski, M. Bauer, E. L. Wisotzky, J.-C. Rosenthal, An AR-Solution for Education and Consultation during Microscopic Surgery, Proc. Computer Assisted Radiology and Surgery (CARS), Rennes, France, 2019. | [bib] [pdf] [url] |
| Conference Abstract | J.-C. Rosenthal, E. L. Wisotzky, P. Eisert, F. C. Uecker, Endoscopic Single-Shot 3D Reconstruction of Oral Cavity, Proc. 41st IEEE Engineering in Medicine and Biology Conference (EMBC), Berlin, Germany, 2019. | [bib] [pdf] [url] |
| Journal Paper | E. L. Wisotzky, F. C. Uecker, P. Arens, S. Dommerich, A. Hilsmann, P. Eisert, Intraoperative Hyperspectral Determination of Human Tissue Properties, Journal of Biomedical Optics, 23(9), SPIE, 2018. | [bib] [pdf] [url] |